From LLMs to enterprise-grade agents

Agents are fast becoming the front door to your brand: they talk directly to your customers, guide their purchases, manage their subscriptions, fix their issues. And people’s expectations are sky-high: the conversation must feel natural, on-brand and grounded, with no awkward pauses or interruptions, and no hallucinations.

But the large language models (LLMs) used to build agents are non-deterministic — the same input can deliver different outputs — and so creating consistently high quality customer experiences is hard. In addition, the questions people ask businesses, and the way they ask them, are pretty limitless, and prompt injection and other types of abuse are increasingly common.

Sierra’s Agent OS enables businesses to create production quality agents that can handle whatever is thrown at them, while reliably and responsibly representing their brands.

The hard part

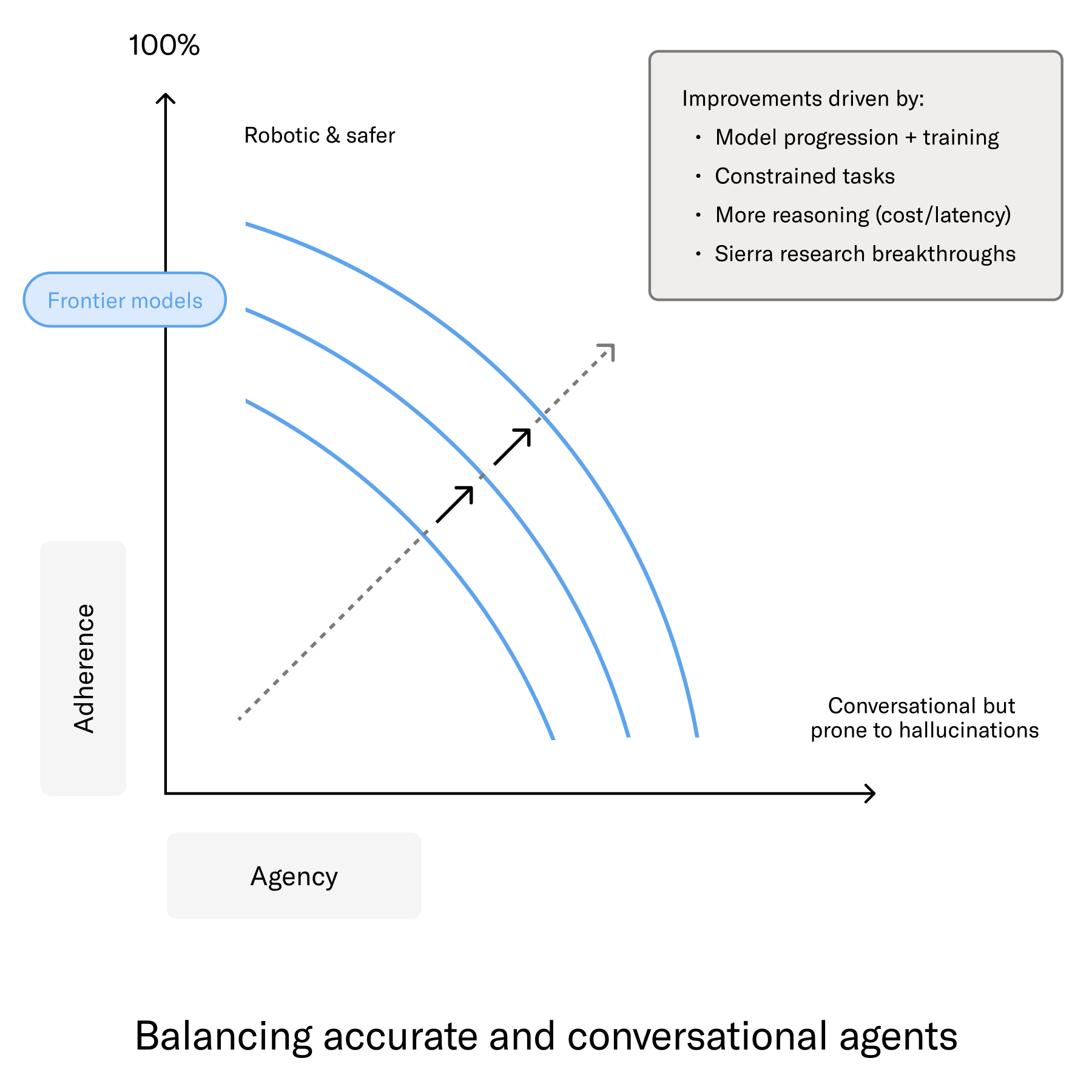

It takes art and science to build an enterprise grade agent — enough direction to complete complex procedures, but not too much that it feels robotic. You also need to minimize latency, especially with voice agents.

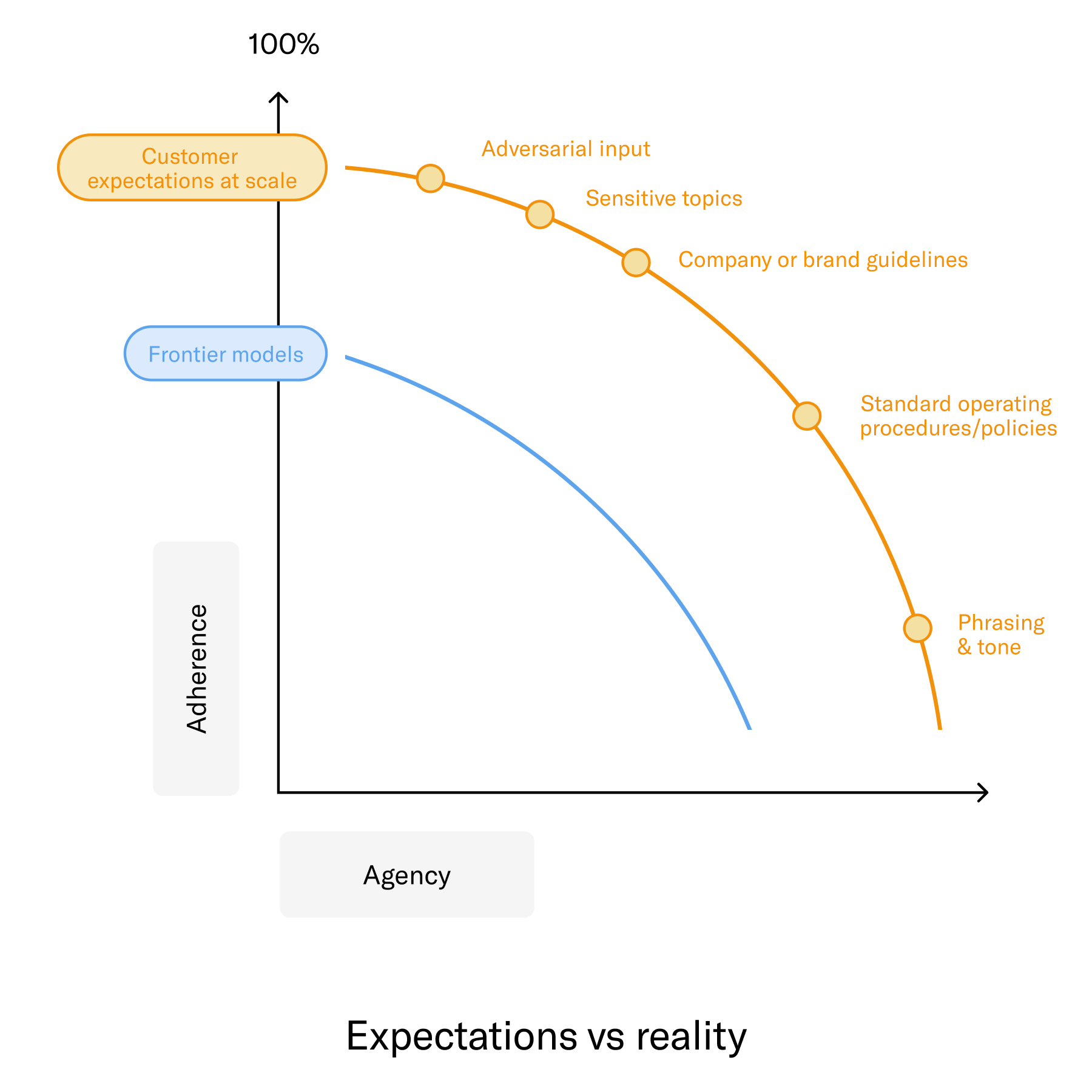

This means pairing high level context and clear goals with hardened guardrails to address specific scenarios, as well as an understanding that all instructions are not created equal. For example, an agent should have much less autonomy on sensitive topics or when someone is trying to exploit it than run of the mill questions.

Embrace the problem

Many companies are tremendously excited about agents but not always aware of the challenges of building directly on LLMs. For example, the need to manage hallucinations, latency, and integrations. At scale, a one-in-ten-thousand hallucination rate is a daily occurrence. Sierra's Agent OS produces stable, reliable agents and enables companies to improve them over time.

The key is shifting the goal from “perfect adherence” to bounded error rates, so the challenge becomes a systems problem, not whack-a-mole. You can then break down the different types of instructions into buckets, each with separate solutions:

Instruction type and tolerance

| Instruction type | Tolerance and guidance | Example |

|---|---|---|

| Adversarial Input, Sensitive Topics, Illegal Activities | Lowest tolerance, maximum adherence, OK with robotic responses | Prompt injection, fraudulent communications, questions the agent should not try to answer |

| Brand Guidelines, Sensitive Policies | Lower tolerance, requires high adherence but with nuanced responses | Agents shouldn’t over-react to comments about competitors but deftly navigate them |

| Standard Operating Procedures or Policies | Medium tolerance, adhere to the spirit of policies, but maintain flexibility | An agent needs to collect an order number, but asking a frustrated customer to provide a number pedantically might not always be the best approach. |

| Phrasing, Tone | Medium to high tolerance, conversational yet respect the company’s tone | Light and jovial topics, maintaining formality and precision but with leeway to respond to changing circumstances. |

Reducing error rates

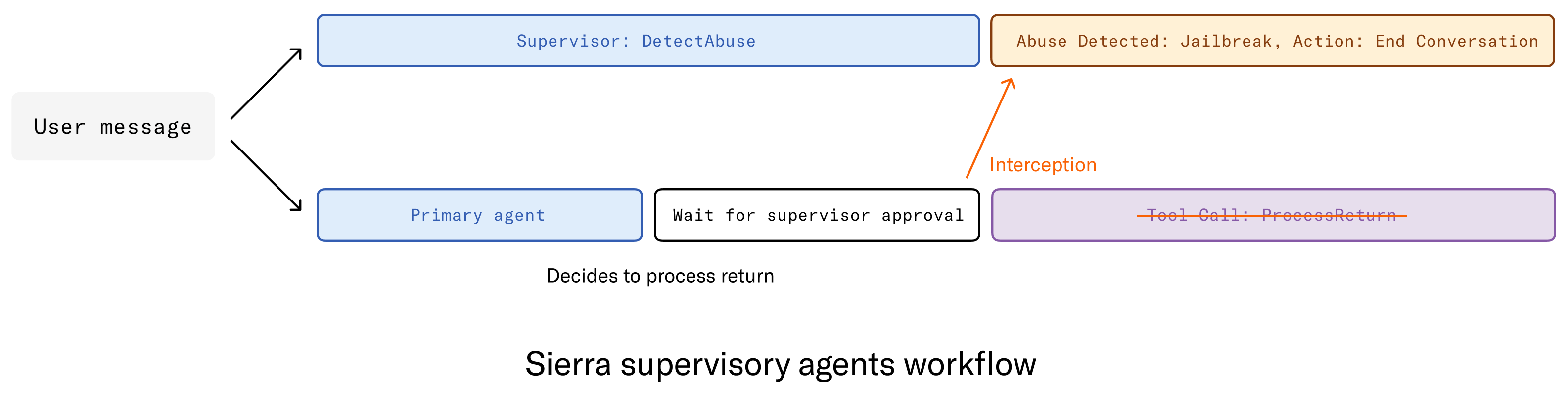

Supervisory agents: Every production agent built on Sierra is managed by several supervisory agents, which ensure they stick to the right policies while also remaining flexible. These “Jiminy Crickets” play different roles and use different LLMs depending on the task at hand, ensuring that:

- Each supervisor’s role is well defined.

- The level of agency associated with each supervisor can be controlled individually.

- Each supervisor can be evaluated and improved independently.

Input filtering: We have a supervisor agent dedicated to detecting and intercepting threat vectors in the user’s input. In the past few months, its accuracy and the range of attacks it can detect have improved significantly — everything from multi-turn context poisoning to advanced jailbreaking and subtle gray area content.

These improvements are rolling out to all Sierra agents, without customers having to do any additional work. We’re also improving auditability and explainability — so agent builders can more confidently review, understand, and refine safety outcomes.

Output interception: We also have supervisors to audit an agent’s every action and response, checking for behavior that’s not compliant with policies or harmful language. For high‑risk topics, supervisors can switch from observe to intercept. If the fix would materially change the meaning or add too much latency, the supervisor can take additional action, like escalating or ending the conversation.

Handling nuance

Appropriate responses: Obvious attempts to break agents are easier to handle, and it’s usually fine if the agent overcorrects. However, that’s not always needed, and with recent updates to Agent OS, supervisors can now take more subtle actions — for example guiding an agent to steer around a reference to a competitor's product (versus ending the conversation entirely).

Detecting underspecification: While agents should be generally helpful, it’s also important to detect and intervene if the agent is in a sensitive situation with insufficient guardrails. As these are not always clear, Agent OS combines supervisors looking for ambiguous situations with filters for highly sensitive topics to intercept conversations when needed.

Constant improvement

Detection and monitoring: In addition to these in conversation safety mechanisms, we offer post turn and post conversation reviews — combining fast, high recall detection with slower, high precision reasoning so the system automatically surfaces and logs defects. Businesses also use manual reviews of real conversations and red teaming exercises to find defects.

Evaluations: We continue investing in improved evaluations so agents can manage challenging interactions with empathy, tact, and a clear understanding of the relevant policies. This includes both evaluations based on real-world situations and synthetic ones such as 𝜏-Bench, as well as simulations that test an agent’s behavior before it ever speaks to a customer.

These complex, purpose-built supervisory models ensure that agents both stick to their safety requirements while also having the autonomy to handle the twists and turns of everyday interactions. And all without any additional effort on our customers’ part. The result: high-performing agents that represent their brands with care and accountability.